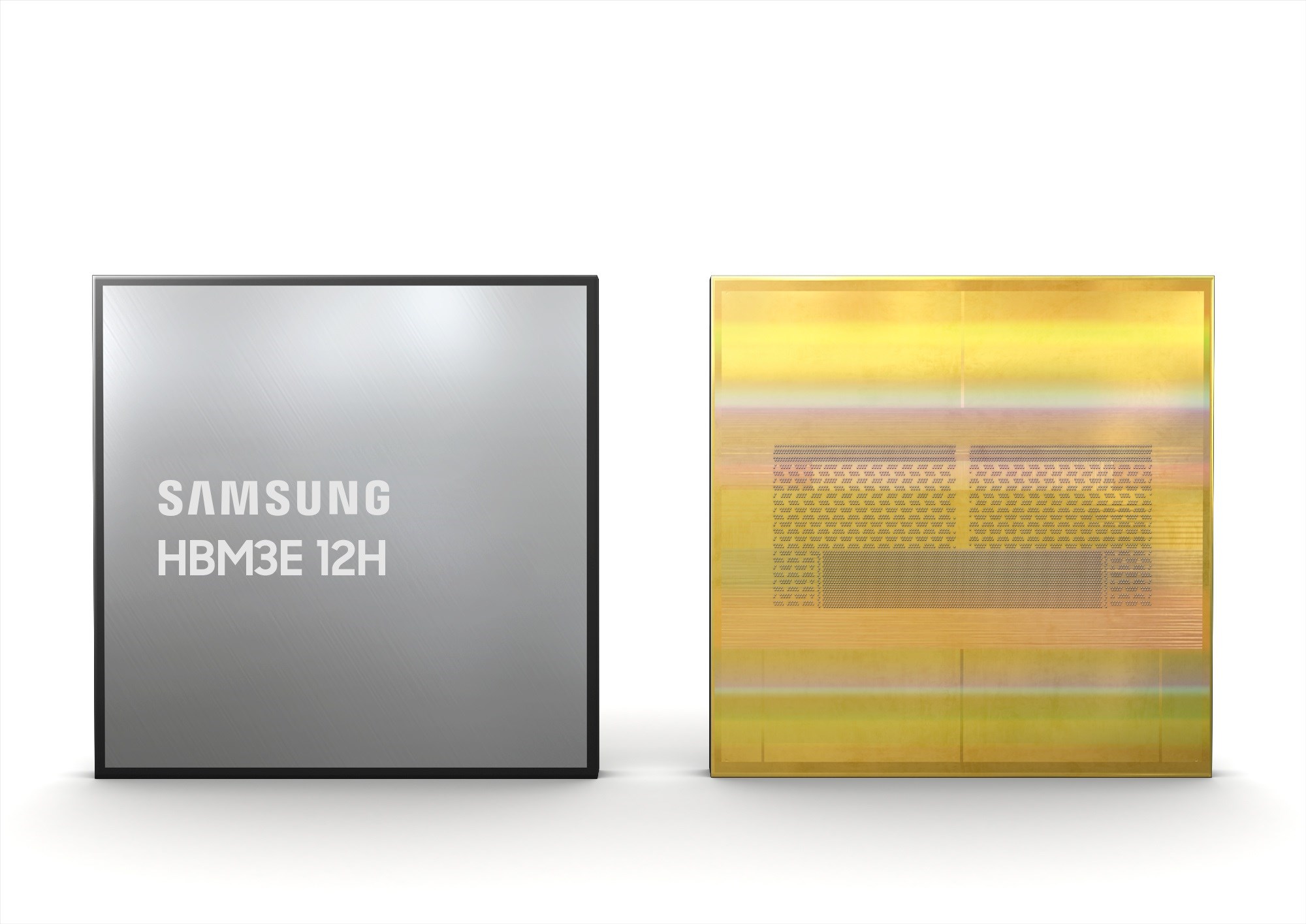

Samsung Unveils 36 GB HBM3E 12H Memory

HBM (high bandwidth memory) is built from multiple DRAM dies stacked vertically, and each die is referred to as a layer; the "12H" in the product name denotes a 12-high stack. Samsung’s new HBM3E 12H stacks twelve DRAM dies, each with a capacity of 24 gigabits (Gb), or 3 gigabytes (GB). Twelve 3 GB layers give a total capacity of 36 GB.
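The capacity arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the function name `stack_capacity_gb` is hypothetical, and it assumes every layer contributes its full per-die density to usable capacity, with 8 bits per byte.

```python
# Hypothetical helper for illustration: total HBM stack capacity from layer count
# and per-die density. Assumes all layers contribute their full density.
BITS_PER_BYTE = 8

def stack_capacity_gb(layers: int, die_gbit: int) -> float:
    """Return stack capacity in gigabytes (GB) from per-die density in gigabits (Gb)."""
    return layers * die_gbit / BITS_PER_BYTE

print(stack_capacity_gb(12, 24))  # 36.0 -> twelve 24 Gb dies
print(stack_capacity_gb(8, 24))   # 24.0 -> eight of the same dies, for comparison
```

Dividing by 8 converts gigabits to gigabytes, so each 24 Gb die contributes 3 GB and twelve of them add up to 36 GB.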

Samsung applied thermal compression non-conductive film (TC NCF) technology so that the 12-layer HBM3E meets the same height requirements as 8-layer stacks. The film reduces the gap between the stacked dies to just a few micrometers. Samsung states that the 12-layer HBM3E offers over 20% higher vertical density than its 8-layer HBM3.

In terms of performance, Samsung claims that, compared with HBM3 8H, HBM3E 12H improves average AI training speed by 34% and lets inference services support 11.5 times as many concurrent users. Samsung has already started sampling HBM3E 12H to customers and aims to begin mass production in the second half of this year. The memory is aimed mainly at AI GPUs, where it could give Samsung a competitive edge over SK Hynix and Micron.